If you spend any time on the internet, you have probably heard of Dave Portnoy. The founder of Barstool Sports, he’s also a prolific pizza reviewer. Since 2013, Portnoy has reviewed nearly 2,000 pizzerias, and his videos regularly gain hundreds of thousands of views.

Portnoy’s ‘One Bite’ reviews can also transform the fate of small businesses. Last year, Portnoy gave the obscure West Palm Beach pizzeria Ah-Beetz a “monster score” of 8.4. Today, Ah-Beetz still sees long lines and is opening four new locations to keep up with demand.

I’m a longtime viewer of Portnoy’s reviews. The videos are entertaining, and I use them to find good pizza. Recently, I’ve noticed a growing sentiment in the YouTube comments: Portnoy’s scores have gotten higher. Dave used to issue “real” scores back in the day. Now? He’s a lot more generous, handing out mostly 7s.

While it’s usually best to ignore YouTube comments, these made me curious: is Portnoy giving higher scores?

Suppose his scores have become more generous, so that today’s 7.1 is last year’s 6.8. Pizzerias with older reviews would then be unfairly compared with newer ones, and there could be hundreds of great restaurants overlooked simply because their review was done years ago.

In this article, I analyzed Portnoy’s scoring consistency to see if his scores had become more generous over time.

Collecting the data

Before I could start, I needed his scores. To my surprise, I could not find an existing dataset. So, I went to the source: the OneBite website. After reviewing the Terms & Conditions, I wrote a Python script to collect the following information for every review:

- Pizzeria Name

- Address

- Portnoy’s Score

- OneBite Community Score

- Number of Community Reviews

- Dave’s Review Date

Once I had this dataset, I removed reviews from 2013 through 2015 and from 2024, because those years either had fewer than 50 reviews or were incomplete. Then, I added a ‘Score Difference’ column measuring the difference between the Community Score and Portnoy’s Score.
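For readers who want to reproduce this cleaning step, here is a minimal sketch in plain Python. It is not my actual script: the `Review` record and the `clean` function are hypothetical names, and the sign of the Score Difference (Portnoy minus Community) is an assumption chosen so that a positive value means Portnoy scored the pizzeria higher than the community did.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class Review:
    """Hypothetical record holding the fields collected for each review."""
    name: str
    address: str
    portnoy_score: float
    community_score: float
    community_count: int
    review_date: date


def clean(reviews, min_per_year=50, incomplete_years=(2024,)):
    """Drop sparse or incomplete years, then pair each kept review with its Score Difference."""
    per_year = Counter(r.review_date.year for r in reviews)
    kept = [
        r
        for r in reviews
        if per_year[r.review_date.year] >= min_per_year
        and r.review_date.year not in incomplete_years
    ]
    # Assumed sign convention: Portnoy minus Community, so a positive value
    # means Portnoy scored the pizzeria higher than the community did.
    return [(r, round(r.portnoy_score - r.community_score, 2)) for r in kept]
```

Passing the year threshold and the incomplete years as parameters keeps the filtering rule explicit, so it is easy to rerun with a different cutoff.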

Are Portnoy’s scores getting higher?

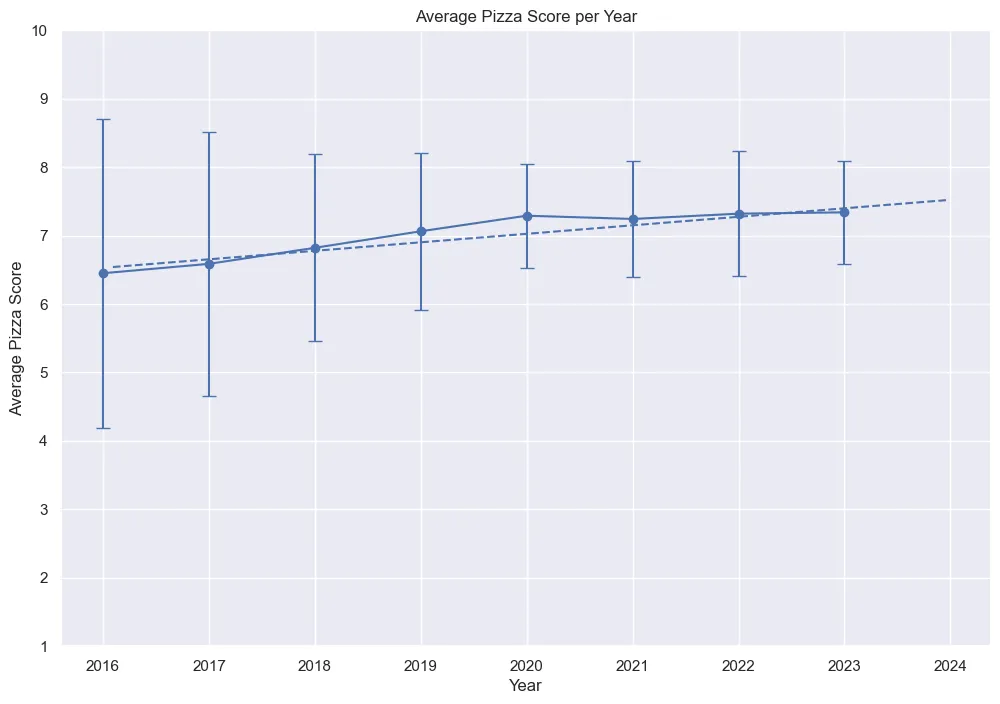

My first step was determining whether Portnoy’s scores changed over time. Plot 1 displays Portnoy’s average score per year, with error bars based on the standard deviation. The longer the error bar, the more frequently extreme values, like 2s or 9s, occurred that year.
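The yearly aggregation behind a plot like this can be sketched as follows. This is an illustration, not the code that produced Plot 1: `yearly_summary` is a hypothetical helper, and it uses the sample standard deviation, which may differ slightly from whatever my plotting tool computed.

```python
from collections import defaultdict
from statistics import mean, stdev


def yearly_summary(pairs):
    """Group (year, portnoy_score) pairs and return {year: (mean, sd, n)}.

    Uses the sample standard deviation; a year with a single review gets an SD of 0.
    """
    by_year = defaultdict(list)
    for year, score in pairs:
        by_year[year].append(score)
    return {
        year: (mean(s), stdev(s) if len(s) > 1 else 0.0, len(s))
        for year, s in sorted(by_year.items())
    }
```

The per-year means and SDs could then be handed to a plotting call such as matplotlib’s `plt.errorbar(years, means, yerr=sds)`.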

Indeed, Portnoy’s average score increased: the 2023 average was nearly 14% higher than the 2016 average. There was also a noticeable difference in error bar length before and after 2020. Comparing key statistics for the two periods made this post-2020 shift clear:

| Statistic | 2016-2019 | 2020-2023 | Change (%) |
|---|---|---|---|
| Average Score | 6.82 | 7.30 | +7.03% |
| Standard Deviation | 1.57 | 0.82 | -47.77% |
| Variance | 2.46 | 0.68 | -72.36% |
| Most Common Score | 6.7 | 7.3 | +8.96% |
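A comparison like this one is straightforward to compute. Below is a minimal sketch with hypothetical helper names, assuming each period’s Portnoy scores are available as a plain list and using sample (not population) variance.

```python
from statistics import mean, mode, stdev, variance


def period_stats(scores):
    """Summary statistics for one period's Portnoy scores (sample SD and variance)."""
    return {
        "Average Score": mean(scores),
        "Standard Deviation": stdev(scores),
        "Variance": variance(scores),
        "Most Common Score": mode(scores),
    }


def compare(before, after):
    """Return {statistic: (before, after, percent change from before to after)}."""
    b, a = period_stats(before), period_stats(after)
    return {k: (b[k], a[k], 100 * (a[k] - b[k]) / b[k]) for k in b}
```

Plugging the rounded averages from the table into the same formula gives 100 × (7.30 − 6.82) / 6.82 ≈ +7.04%; the small gap from +7.03% presumably comes from rounding the means for display.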

It appeared that, yes, Portnoy was scoring pizzas differently: since 2020, he’d been giving out higher and less variable scores. Case closed?

Not so fast. The shift in variability made me suspicious. Even considering the pandemic, I expected to see the variance return to its pre-2020 level by 2023. But that didn’t happen. Something was making Portnoy’s scores higher and less extreme.

Before I could claim Portnoy had become more generous, I needed to figure out what was causing this shift.

What caused Portnoy’s scoring shift?

I started by watching his videos to find evidence of the shift’s cause. Over the course of a week, I watched 120 reviews and took notes on what I observed.

The videos told an interesting story. Most reviews from 2016 and 2017 were in NYC. Occasionally, Portnoy alluded to how he found the spot: generally via a friend or an internet comment, and sometimes the pizzeria simply seemed to be on the way to wherever he was going. Essentially, the selection felt random.

Contrast that with his post-2020 reviews. New York City reviews became less common, replaced by trips to other states, like Florida, or to areas known for their pizza, like New Haven. In several videos, Portnoy asked his assistant how he had found the pizzeria, and the answer was often the OneBite App.

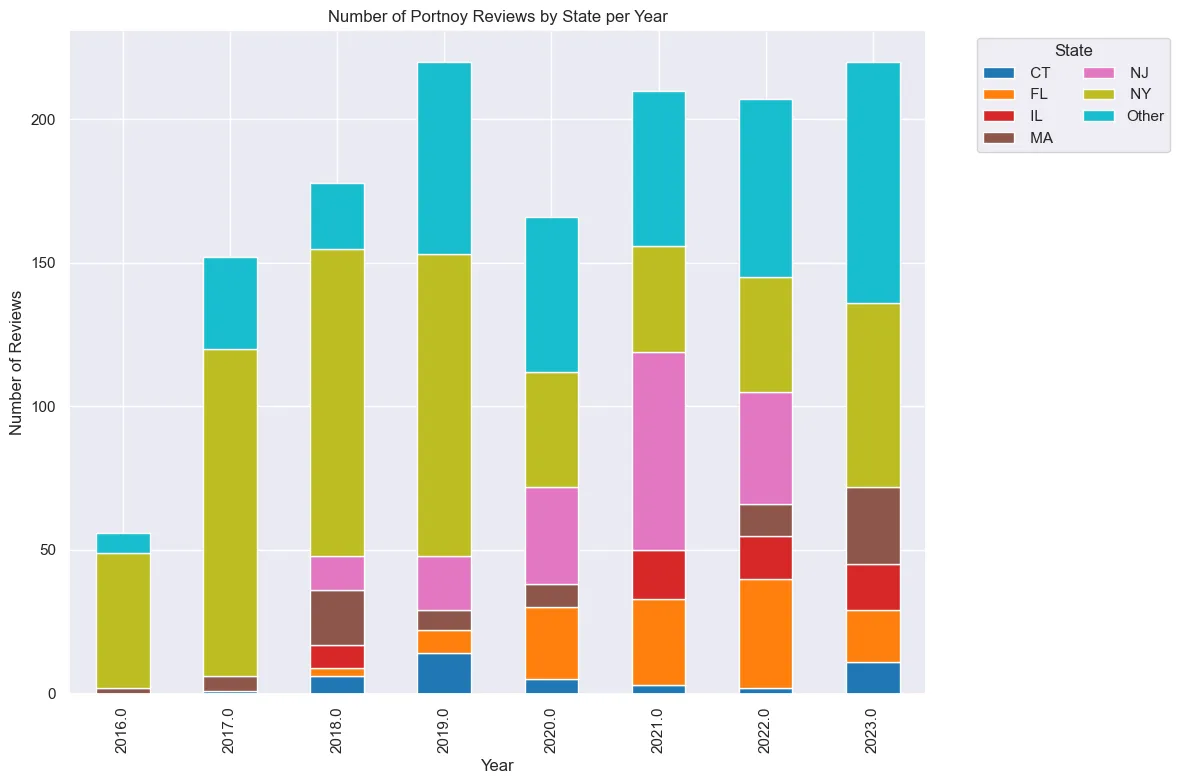

I wanted to verify whether this shift in review location could have affected the average score. Plot 2 breaks down each year’s reviews by location.

Sure enough, review location closely tracked the observed shift in average score. Between 2016 and 2019, the majority of reviews were in NYC. From 2020 onward, NYC’s share of reviews halved, while states like Florida and New Jersey saw their shares increase.
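Turning raw reviews into per-year location shares takes only a few lines. This sketch assumes each review has already been tagged with a location label such as "NYC" or a state abbreviation; how those labels are derived from the address is left out, and `location_shares` is a hypothetical name.

```python
from collections import Counter, defaultdict


def location_shares(tagged):
    """tagged: iterable of (year, location) pairs, e.g. (2017, "NYC").

    Returns {year: {location: share of that year's reviews}}.
    """
    counts = defaultdict(Counter)
    for year, loc in tagged:
        counts[year][loc] += 1
    return {
        year: {loc: n / sum(c.values()) for loc, n in c.items()}
        for year, c in sorted(counts.items())
    }
```

Working with shares rather than raw counts matters here, because the number of reviews per year also changes.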

Does this mean Florida and New Jersey have better pizza than New York? No, at least I don’t think so. Rather, I suspect it points to a selection bias.

Selection bias arises from the way sample data is selected from a population. If a sample is not randomly selected, it can create trends that lead to inaccurate conclusions. In a perfect world, Portnoy would select pizzerias randomly to help control for factors that might influence the score. Based on the videos I watched, his selection process in earlier years appeared more random than it is today. Here’s how I believe Portnoy’s selection currently works:

It seems to begin with Portnoy taking a trip, perhaps to Florida or Nantucket. Dave may be less familiar with the pizza options in these areas and, as his popularity has grown, wants to focus on trying only the “best” places. So Portnoy tasks his assistant with using the OneBite App to select pizzerias with the highest Community Scores. If so, the score increase observed in Plot 1 isn’t necessarily from Portnoy being more generous or ‘being off his game’. Instead, his assistant is picking more highly rated pizzerias.
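To make the selection-bias argument concrete, here is a toy simulation. It is not fitted to any OneBite data: the quality distribution, the noise levels, and the function name are all hypothetical. A reviewer whose scoring rule never changes rates pizzerias chosen either at random or by highest Community Score.

```python
import random
from statistics import mean, stdev


def simulate_selection(n_pizzerias=2000, n_reviews=200, seed=0):
    """Compare a consistent reviewer's scores under random vs. top-Community-Score selection.

    All numbers are synthetic: latent quality ~ N(6.5, 1.2), and both the
    community and the reviewer observe that quality with independent noise.
    Values are not clipped to the 0-10 scale.
    """
    rng = random.Random(seed)
    quality = [rng.gauss(6.5, 1.2) for _ in range(n_pizzerias)]
    community = [q + rng.gauss(0, 0.4) for q in quality]
    # The reviewer's scoring rule is fixed: it never becomes more generous.
    reviewer = [q + rng.gauss(0, 0.6) for q in quality]

    ids = range(n_pizzerias)
    random_pick = rng.sample(ids, n_reviews)
    top_pick = sorted(ids, key=lambda i: community[i], reverse=True)[:n_reviews]

    def summarize(picked):
        scores = [reviewer[i] for i in picked]
        return round(mean(scores), 2), round(stdev(scores), 2)

    return {"random": summarize(random_pick), "top_community": summarize(top_pick)}
```

Under these made-up parameters, the top-Community-Score picks come out with a higher mean and a smaller standard deviation than the random picks, even though the simulated reviewer never changes how he scores. That is the same "higher and less extreme" pattern seen after 2020.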

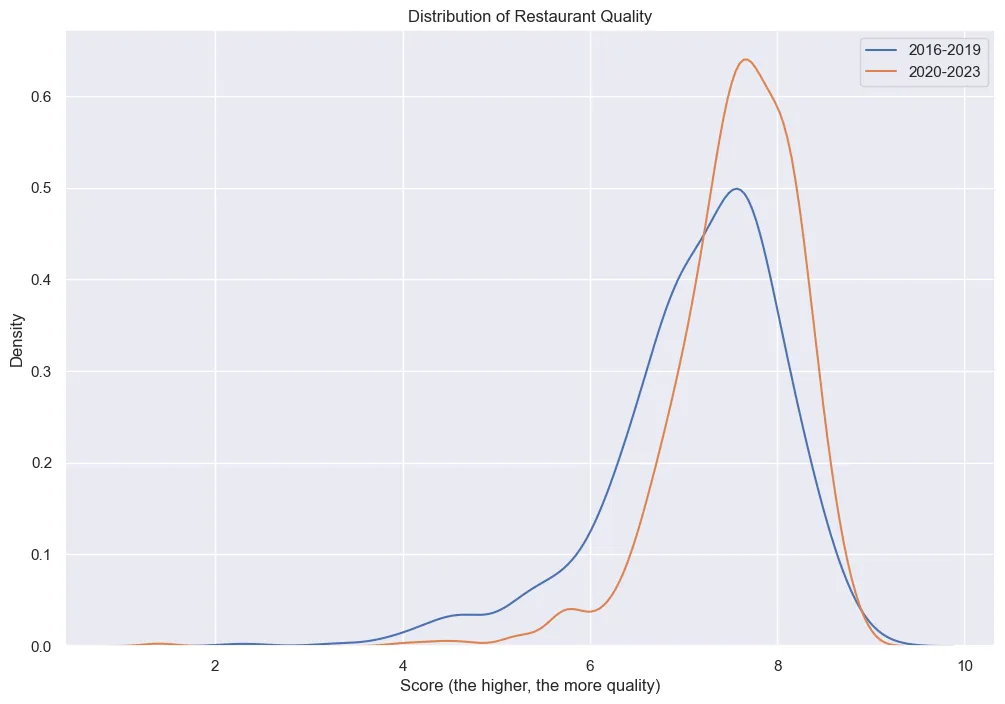

This hypothesis is supported by Plot 3, which shows the distribution of pizzeria Community Scores for 2016-2019 and 2020-2023. The 2020-2023 distribution had a higher concentration, or density, of restaurants with high Community Scores, whereas the 2016-2019 distribution had less density there and was more spread out.
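A density curve like the ones in Plot 3 is typically a kernel density estimate. The sketch below shows the idea using only the standard library. The `kde` helper is hypothetical, and its default bandwidth (the normal-reference rule of thumb, 1.06 · sd · n^(-1/5)) is an assumption; the tool that drew Plot 3 may have used a different rule.

```python
from statistics import NormalDist, stdev


def kde(values, grid, bandwidth=None):
    """Gaussian kernel density estimate of `values`, evaluated at each point of `grid`.

    The default bandwidth is the normal-reference rule of thumb,
    1.06 * sd * n ** (-1/5); other tools may pick a different bandwidth.
    """
    n = len(values)
    if bandwidth is None:
        bandwidth = 1.06 * stdev(values) * n ** (-1 / 5)
    kernels = [NormalDist(v, bandwidth) for v in values]
    # Average of one normal curve centered on each observed score.
    return [sum(k.pdf(x) for k in kernels) / n for x in grid]
```

Evaluating each period’s Community Scores on the same grid, for example `[i / 10 for i in range(101)]`, produces two curves that can be overlaid directly.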

So, are Portnoy’s Scores consistent?

With the change in how Portnoy selects pizzerias affecting the average, I couldn’t rely on the average alone to determine whether Portnoy had become more generous. To detect that, I needed to compare his scores with a consistent benchmark. Thankfully, there was the Community Score, which aggregates many individual scores, submitted over time, into one average. Because of this, the effect of any one individual’s biases is lessened, and I felt it provided a sufficient benchmark.

I could compare each pizzeria’s Community Score with its Portnoy Score using the Score Difference. If Portnoy had remained consistent over time, I would expect little to no change in the Score Difference. If he had become more generous over time, I’d expect the Score Difference to increase steadily.

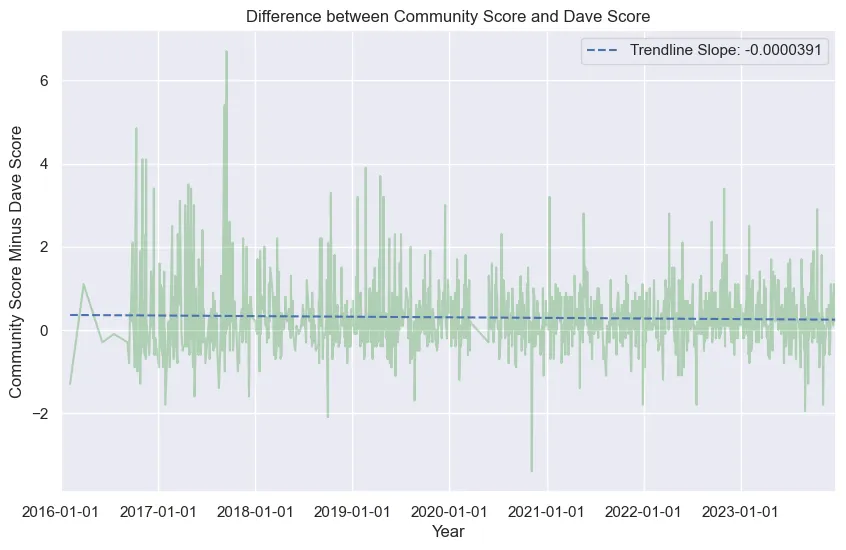

Plot 4 shows a line plot of the Score Difference for each review. To measure changes in the Score Difference, I added a linear trendline. A positive slope would indicate that Portnoy is becoming more generous, while a negative slope would suggest he is becoming less so.

The trendline is effectively flat, hovering around a Score Difference of 0.50. This flat slope suggests Portnoy’s scoring has been remarkably consistent, despite the changes in how pizzerias are selected.

Conclusion

Dave Portnoy has been reviewing pizza almost every day since 2013. In this analysis, I showed that the perceived increase in Portnoy’s scores likely stemmed from the way he and his team picked spots to review, not from Portnoy becoming more generous. In fact, Portnoy’s Scores demonstrated impressive consistency over time when compared with the Community Scores.

This is good news for OneBite users and pizzerias alike. While you may not agree with Portnoy’s taste, you can be more confident that a 7.1 he gave in 2016 is comparable to a 7.1 he gave in 2023.

Appendix A: Resources

Appendix B: Community Score Note

In this analysis, I used the Community Score as a benchmark. You might ask, “what if the Community Score had also become more generous over time?” and you’d be right to ask that question. This analysis assumed Community Scores remained consistent and did not experience any inflation over time. Here are two reasons that I believe justify this assumption:

- Composite Nature: The Community Score is an average of multiple individual scores. This helps reduce the influence or bias of any specific individual.

- Temporal Spread: The individual scores that make up the Community Score are spread out over time. This helps control for temporal changes in the restaurant’s quality.

Appendix C: Stats stuff for nerds

I made a few decisions in this analysis I’d like to explain for those interested in my approach.

- Error Bars: In Plot 1, I opted to use standard deviation to create my error bars instead of standard error. I wanted to emphasize the variability in pizza scores and standard deviation displays this more than standard error.

- Mean instead of Median: Median is often a better descriptive statistic than mean because it is less affected by outliers. However, I chose the mean for two reasons. First, as with the error bars, I wanted to show that the score data was highly variable, and the mean plot made that obvious. Second, people see the mean more often than the median and have a better intuition for what it represents.

- Yearly Mean v. Monthly: Plot 1 measured the annual mean. I decided to use Year as the aggregate to keep the plot clean. However, in my preliminary analysis, I observed that seasonality affected the monthly mean, so I might do a follow-up on this.

- Sampling: My analysis included 1,410 reviews. This was fewer than the 1,737 reviews Dave had completed by the time I pulled the data (7/22/2024). The difference is mostly from removing the 2013-2015 and 2024 reviews, but my web scraper also missed approximately 10% of reviews. This was due to how the scraper navigated the website: it went through each ‘fan favorites’ page looking for pizzerias that had both a Portnoy Score and a Community Score. Since I couldn’t sort pizzerias by whether they had both scores, the scraper had to iterate through thousands of pages. This took forever and had diminishing returns, as the later pages had fewer pizzerias that fit my criteria. So, I stopped scraping once I hit 90% of all reviews.
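To illustrate the first two choices above, here is a small sketch contrasting the statistics involved on made-up scores. `describe` is a hypothetical helper, and the standard error is computed with the usual formula SE = SD / √n.

```python
from math import sqrt
from statistics import mean, median, stdev


def describe(scores):
    """Contrast mean vs. median and standard deviation vs. standard error for one set of scores."""
    sd = stdev(scores)
    return {
        "mean": mean(scores),
        "median": median(scores),
        "sd": sd,                      # spread of individual scores (what Plot 1's error bars show)
        "se": sd / sqrt(len(scores)),  # uncertainty of the mean itself; shrinks as n grows
    }
```

With the made-up scores `[2.0, 7.0, 7.0, 7.5, 8.0]`, the single 2 drags the mean down to 6.3 while the median stays at 7.0. The standard error is always smaller than the SD by a factor of √n, which is why SD-based error bars show the spread of pizza scores more clearly.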